Motors and Drives Basics

The venerable electric motor that was the muscle of the industrial revolution is becoming the smart muscle of the computer-controlled plant and commercial facility of the future. The advent of powerful, reliable electronic drives is keeping motors in the forefront of this technological evolution.

Electric motors have a tremendous impact on overall energy use. Between 30 and 40 percent of all fossil fuels burned are used to generate electricity, and two-thirds of that electricity is converted by motors into mechanical energy.

The Fundamentals of Energy Management series this month will focus on topics that will allow facility managers and engineers at commercial and industrial facilities to understand the basics of motors and drives. This information will help them to select and implement strategies with the goal of reducing motor and drive costs as well as decreasing downtime.

AC Induction Motors

AC induction motors are ideal for most industrial and commercial applications because of their simple construction and low number of parts, which reduce maintenance cost. Induction motors are frequently used for both constant-speed and adjustable speed drive (ASD) applications.

The two basic parts of an induction motor are the stationary stator located in the motor frame and the rotor that is free to rotate with the motor shaft. Today's motor design and construction are highly refined. For example, stator and rotor laminations have been designed to achieve maximum magnetic density with minimum core losses and heating. The basic simplicity of this design ensures high efficiency and makes them easily adaptable to a variety of shapes and enclosures.

A three-phase induction motor can best be understood by examining the three-phase voltage source that powers the motor. Three-phase currents flowing in the motor leads establish a rotating magnetic field in the stator coils. This magnetic field continuously pulsates across the air gap and into the rotor. As magnetic flux cuts across the rotor bars, a voltage is induced in them, much as a voltage is induced in the secondary winding of a transformer. Because the rotor bars are part of a closed circuit (including the end rings), a current begins to circulate in them. The rotor current in turn produces a magnetic field that interacts with the magnetic field of the stator. Since this field is rotating and magnetically interlocked with the rotor, the rotor is dragged around with the stator field.

When there is no mechanical load on the motor shaft (no-load condition), the rotor almost manages to keep up with the synchronous speed of the rotating magnetic field in the stator coils. Drag from bearing friction and air resistance prevents perfect synchronicity. As the load increases on the motor shaft, the actual speed of the rotor tends to fall further behind the speed of the rotating magnetic field in the stator. This difference in speed causes more magnetic lines to be cut, resulting in more torque being developed in the rotor and delivered to the shaft mechanical load. The rotor always turns at the exact speed necessary to produce the torque required to meet the load placed on the motor shaft at that moment in time. This is usually a dynamic situation, with the motor shaft speed constantly changing slightly to accommodate minor variations in load.

The rotor consists of copper or aluminum bars connected together at the ends with heavy rings. The construction is similar to that of a squirrel cage, a term often used to describe this type of ac induction motor.

The rotating magnetic field in the stator coils, in addition to inducing voltages in the rotor bars, also induces voltages in the stator and rotor cores. The voltages in these cores cause small currents, called eddy currents, to flow. The eddy currents serve no useful purpose and result in wasted power. To keep these currents to a minimum, the stator and rotor cores are made of thin steel discs called laminations. These laminations are coated with insulating varnish and then edge welded together to form a core. This type of core construction substantially reduces eddy current losses, but does not entirely eliminate them.

By varying the design of the basic squirrel-cage motor, almost any characteristic of speed, torque, and voltage can be controlled by the designer. To keep these characteristics consistent between manufacturers, the National Electrical Manufacturers Association (NEMA) has established standards for a number of motor features.

The speed of an ac induction motor depends on the frequency of the supply voltage and the number of poles for which the motor is wound. The term poles refers to the manner in which the stator coils are connected to the three incoming power leads to create the desired rotating magnetic field. Motors are always wound with an even number of poles. The higher the input frequency, the faster the motor runs. The more poles a motor has, the slower it runs at a given input frequency. The synchronous speed of an ac induction motor is the speed at which the stator magnetic flux rotates around the stator core at the air gap. At 60 Hz the following synchronous speeds are obtained:

| Number of poles | Synchronous speed (rpm) |
| --- | --- |
| 2 | 3,600 |
| 4 | 1,800 |
| 6 | 1,200 |
| 8 | 900 |
| 10 | 720 |
| 12 | 600 |

Provided the motor is properly constructed, the output speed can be doubled for a given number of poles by running an ASD that supplies the motor at an output frequency of 120 Hz.
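The synchronous speeds listed above follow from the standard relationship: synchronous rpm = 120 × frequency / number of poles. The short sketch below reproduces the 60 Hz figures and the 120 Hz doubling; the function name `synchronous_speed_rpm` is only an illustrative choice, not something from the original article.

```python
def synchronous_speed_rpm(frequency_hz: float, poles: int) -> float:
    """Synchronous speed of an ac induction motor: 120 * f / P."""
    if poles <= 0 or poles % 2 != 0:
        # Motors are always wound with an even number of poles.
        raise ValueError("poles must be a positive even number")
    return 120.0 * frequency_hz / poles


# Reproduce the 60 Hz values for 2 to 12 poles.
for poles in (2, 4, 6, 8, 10, 12):
    print(poles, synchronous_speed_rpm(60.0, poles))

# Doubling the supply frequency to 120 Hz doubles the synchronous speed.
print(synchronous_speed_rpm(120.0, 4))
```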

The actual speed of an induction motor rotor and shaft is always somewhat less than its synchronous speed. The difference between the synchronous and actual speed is called slip. If the rotor rotated as fast as the stator magnetic field, the rotor conductor bars would appear to be standing still with respect to the rotating field. There would be no voltage induced in the rotor bars and no current would be set up to produce torque.

Induction motors are made with slip ranging from less than 5% up to 20%. A motor with a slip of 5% or less is known as a normal-slip motor. A normal-slip motor is sometimes referred to as a 'constant speed' motor because the speed changes very little from no-load to full-load conditions. A common four-pole motor with a synchronous speed of 1,800 rpm may have a no-load speed of 1,795 rpm and a full-load speed of 1,750 rpm. The rate of change of slip is approximately linear from 10% to 110% load, when all other factors such as temperature and voltage are held constant. Motors with slip over 5% are used for hard-to-start applications.
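Slip is usually expressed as a percentage of synchronous speed. As a rough sketch using the four-pole example speeds quoted above (the function name `slip_percent` is an illustrative assumption):

```python
def slip_percent(synchronous_rpm: float, actual_rpm: float) -> float:
    """Slip as a percentage of synchronous speed."""
    return (synchronous_rpm - actual_rpm) / synchronous_rpm * 100.0


# Four-pole motor from the text: 1,800 rpm synchronous speed.
no_load_slip = slip_percent(1800.0, 1795.0)    # roughly 0.28 %
full_load_slip = slip_percent(1800.0, 1750.0)  # roughly 2.78 %
print(round(no_load_slip, 2), round(full_load_slip, 2))
```

Both values are well under 5%, which is why such a motor falls in the normal-slip category.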

The direction of rotation of a poly-phase ac induction motor depends on the connection of the stator leads to the power lines. Interchanging any two input leads reverses rotation.

Torque and Horsepower

Torque and horsepower are two very important characteristics that determine the size of the motor for a particular application. Torque is the turning effort. For example, suppose a grinding wheel with a crank arm one-foot long takes a force of one pound to turn the wheel at steady rate. The torque required is one pound times one foot or one foot-pound. If the crank is turned twice as fast, the torque remains the same. Regardless of how fast the crank is turned, the torque is unchanged as long as the crank is turned at a steady speed.

Horsepower takes into account how fast the crank is turned. Turning the crank more rapidly takes more horsepower than turning the crank slowly. Horsepower is the rate of doing work. By definition, one horsepower equals 33,000 foot-pounds per minute. In other words, to lift a 33,000-pound load one foot in one minute would require one horsepower.
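The crank example can be put into numbers. One revolution of a crank moves the applied force through a distance of 2π times the arm length, so the work per minute is torque × 2π × rpm, and dividing by 33,000 foot-pounds per minute gives horsepower. The sketch below assumes torque in pound-feet and speed in rpm; the function name is illustrative.

```python
import math

FT_LB_PER_MIN_PER_HP = 33_000.0  # definition of one horsepower


def horsepower(torque_lb_ft: float, speed_rpm: float) -> float:
    """Horsepower delivered at a given torque (lb-ft) and speed (rpm)."""
    work_per_minute = torque_lb_ft * 2.0 * math.pi * speed_rpm  # ft-lb per minute
    return work_per_minute / FT_LB_PER_MIN_PER_HP


# Same one foot-pound torque, two different crank speeds:
slow = horsepower(1.0, 60.0)
fast = horsepower(1.0, 120.0)
print(slow, fast)  # the faster crank needs twice the horsepower
```

This is the origin of the common shortcut hp ≈ torque × rpm / 5,252, since 33,000 / 2π is about 5,252.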

The only way to improve motor efficiency is to reduce motor losses. Since motor losses produce heat, reducing losses not only saves energy directly but can also reduce cooling load on a facility's air conditioning system.

Motor energy losses can be segregated into five major areas. Each area is influenced by the motor manufacturer's design and construction decisions. One design consideration, for example, is the size of the air gap between the rotor and the stator. Large air gaps tend to maximize efficiency at the expense of a lower power factor. Small air gaps slightly compromise efficiency while significantly improving power factor.

Motor losses may be grouped as fixed or variable losses. Fixed losses occur whenever the motor is energized and remain constant for any given voltage and speed. Variable losses increase with an increase in motor load. Core loss and friction and windage losses are fixed. Variable losses include stator- and rotor-resistance losses and stray load losses.

Motor Economics

The principal factors in energy-saving calculations are motor efficiency, run hours (at a certain load), and the cost of electricity. When a motor runs at nearly full load for many hours at a facility with high electrical costs, the resulting savings will usually justify a 'premium efficiency' unit. In some cases, the savings may be great enough to warrant taking a perfectly serviceable older motor off-line and upgrading to a new, premium-efficiency model. For applications with less than continuous use or at lower than full loading, upgrading a working motor will usually not make sense.
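A common way to combine these three factors is to compare the electrical input needed by each motor for the same shaft output. The sketch below is a simplified estimate, not a full economic analysis: it assumes a constant load and constant efficiencies, and every input value in the example (50 hp, 8,000 hours, $0.10/kWh, 90% versus 95% efficiency) is hypothetical. The only fixed constant is 0.746 kW per horsepower.

```python
KW_PER_HP = 0.746


def annual_savings_dollars(
    rated_hp: float,
    load_fraction: float,
    hours_per_year: float,
    cost_per_kwh: float,
    standard_efficiency: float,
    premium_efficiency: float,
) -> float:
    """Estimated yearly cost difference between two motors driving the same load."""
    shaft_kw = rated_hp * KW_PER_HP * load_fraction
    # Input power = shaft power / efficiency, so the saving is the difference in input.
    saved_kw = shaft_kw * (1.0 / standard_efficiency - 1.0 / premium_efficiency)
    return saved_kw * hours_per_year * cost_per_kwh


# Hypothetical example: 50 hp motor at full load, nearly continuous duty.
print(round(annual_savings_dollars(50.0, 1.0, 8000.0, 0.10, 0.90, 0.95), 2))
```

Halving the run hours or the load fraction halves the result, which illustrates why lightly used motors rarely justify replacement.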

Commercial and industrial firms today use adjustable-speed drive (ASD) systems for a variety of applications. Most common of these include standard pumps, fans, and blowers. Newer applications include hoists and cranes, conveyors, machine tools, film lines, extruders, and textile-fiber spinning machines.

Many applications have unique demands and characteristics.

Drive vendors have responded to this demand by producing a variety of drives. The combination of the many types of drives available and the abundance of applications has made the selection of the optimum drive for a given application a challenge.

New generation ASDs have evolved with advancements in solid-state electronics. ASDs can now be applied to ac motors regardless of motor horsepower or location within a facility and can be used to drive almost all types of motorized equipment, from a small fan to the largest extruder or machine tool. Commercial and industrial facilities can expect to dramatically reduce both energy consumption and operating and maintenance costs while offering improved operating conditions by using new generation electronic ASDs. The latest generation of ASDs allows ac induction motors to be just as controllable and efficient as their dc counterparts were.

Historically a variety of terms have been used to describe a system that permits a mechanical load to be driven at user-selected speeds. These terms include, but are not limited to:

Variable-Frequency Drive

Adjustable-Frequency Drive

Adjustable-Speed Drive

Basic ASD Components

Most ASD units consist of three basic parts. A rectifier converts the fixed-frequency ac input voltage to dc. An inverter switches the rectified dc voltage to an adjustable-frequency ac output voltage. (The inverter may also control output current flow, if desired.) A dc link connects the rectifier to the inverter. A set of controls directs the rectifier and inverter to produce the desired ac frequency and voltage to meet the needs of the ASD system at any moment in time.

The advantages of ASDs do not stop with saving energy and improving control. ASD technology can now be applied to manufacturing equipment previously considered too expensive or uneconomical. Such applications are often unique to a particular industry and its equipment, or even to a particular facility. Cost benefits, such as those obtained from improved quality, may be desirable for each application.

ELECTRIC MOTOR CONTROLS Part 1

Motor Control Topics

There are four major motor control topics or categories to consider. Each of these has several subcategories and sometimes the subcategories overlap to some extent. Certain pieces of motor control equipment can accomplish multiple functions from each of the topics or categories.

The four categories include:

1) Starting the Motor

Disconnecting Means

Across the Line Starting

Reduced Voltage Starting

2) Motor Protection

Overcurrent Protection

Overload Protection

Other Protection (voltage, phase, etc)

Environment

3) Stopping the Motor

Coasting

Electrical Braking

Mechanical Braking

4) Motor Operational Control

Speed Control

Reversing

Jogging

Sequence Control

An understanding of each of these areas is necessary to apply motor control principles and equipment effectively in order to operate and protect a motor.

Motor Controller

A motor controller is the actual device that energizes and de-energizes the circuit to the motor so that it can start and stop.

• Motor controllers may include some or all of the following motor control functions: starting, stopping, over-current protection, overload protection, reversing, speed changing, jogging, plugging, sequence control, and pilot light indication.

Controllers range from simple to complex and can provide control for one motor, groups of motors, or auxiliary equipment such as brakes, clutches, solenoids, heaters, or other signals.

Motor Starter

The starting mechanism that energizes the circuit to an induction motor is called the “starter” and must supply the motor with sufficient current to provide adequate starting torque under worst case line voltage and load conditions when the motor is energized.

• There are several different types of equipment suitable for use as “motor starters” but only two types of starting methods for induction motors:

1. Across the Line Starting

2. Reduced Voltage Starting

Across the Line Starting of Motors

Across the Line starting connects the motor windings/terminals directly to the circuit voltage “across the line” for a “full voltage start”.

• This is the simplest method of starting a motor (and usually the least expensive).

• Motors connected across the line are capable of drawing full in-rush current and developing maximum starting torque to accelerate the load to speed in the shortest possible time.

• All NEMA induction motors up to 200 horsepower, and many larger ones, can withstand full voltage starts. (The electric distribution system or processing operation may not, though, even if the motor will.)

Across the Line Starters

Figure 26. Manual Starter

There are two common types of “across the line” starters:

1. Manual Motor Starters

2. Magnetic Motor Starters

Manual Motor Starters

A manual motor starter is a package consisting of a horsepower-rated switch with one set of contacts for each phase and corresponding thermal overload devices to provide motor overload protection.

• The main advantage of a manual motor starter is its lower cost compared with a magnetic motor starter: it offers equivalent motor protection but less motor control capability.

• Manual motor starters are often used for smaller motors, typically fractional horsepower motors, but the National Electrical Code allows their use up to 10 horsepower.

• Since the switch contacts remain closed if power is removed from the circuit without operating the switch, the motor restarts when power is reapplied, which can be a safety concern.

• They do not allow the use of remote control or auxiliary control equipment as a magnetic starter does.

Magnetic Motor Starters

A magnetic motor starter is a package consisting of a contactor capable of opening and closing a set of contacts that energize and de-energize the circuit to the motor, along with additional motor overload protection equipment.

Figure 27. Magnetic Starter

• Magnetic starters are used with larger motors (required above 10 horsepower) or where greater motor control is desired.

• The main element of the magnetic motor starter is the contactor, a set of contacts operated by an electromagnetic coil.

- Energizing the coil causes the contacts (A) to close, allowing large currents to be initiated and interrupted by a smaller voltage control signal.

- The control voltage need not be the same as the motor supply voltage and is often low voltage, allowing start/stop controls to be located remotely from the power circuit.

• Closing the Start button contact energizes the contactor coil. An auxiliary contact on the contactor is wired to seal in the coil circuit. The contactor de-energizes if the control circuit is interrupted, the Stop button is operated, or if power is lost.

• The overload contacts are arranged so an overload trip on any phase will cause the contactor to open and de-energize all phases.
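The start/stop seal-in behavior described above can be modeled as a small piece of logic. The sketch below is only an illustration of the control principle, not a model of any particular starter: the class name `MagneticStarter` and the `scan` method are hypothetical, and each call to `scan` stands for one evaluation of the control circuit.

```python
class MagneticStarter:
    """Toy model of a start/stop control circuit with a seal-in auxiliary contact."""

    def __init__(self) -> None:
        self.coil_energized = False

    def scan(
        self,
        start_pressed: bool,
        stop_pressed: bool,
        overload_tripped: bool = False,
        control_power: bool = True,
    ) -> bool:
        # The auxiliary contact follows the contactor, so it is closed
        # only if the coil was already energized.
        seal_in = self.coil_energized
        self.coil_energized = (
            control_power
            and not stop_pressed
            and not overload_tripped
            and (start_pressed or seal_in)
        )
        return self.coil_energized


starter = MagneticStarter()
print(starter.scan(start_pressed=True, stop_pressed=False))   # Start pressed: coil picks up
print(starter.scan(start_pressed=False, stop_pressed=False))  # Start released: sealed in
print(starter.scan(False, False, control_power=False))        # power lost: drops out
print(starter.scan(False, False, control_power=True))         # power back: stays off
```

The last two calls show the difference from a manual starter: after a power loss the contactor stays open until Start is pressed again.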

Reduced Voltage Starting of Motors

Reduced Voltage Starting connects the motor windings/terminals at lower than normal line voltage during the initial starting period to reduce the inrush current when the motor starts.

• Reduced voltage starting may be required when:

- The current in-rush from the motor starting adversely affects the voltage drop on the electrical system.

- It is necessary to reduce the mechanical “starting shock” on drive-lines and equipment when the motor starts.

• Reducing the voltage reduces the current in-rush to the motor and also reduces the starting torque available when the motor starts.

• All NEMA induction motors will accept reduced voltage starting; however, it may not provide enough starting torque in some situations to drive certain specific loads.

If the driven load or the power distribution system cannot accept a full voltage start, some type of reduced voltage or "soft" starting scheme must be used.

• Typical reduced voltage starter types include:

1. Solid State (Electronic) Starters

2. Primary Resistance Starters

3. Autotransformer Starters

4. Part Winding Starters

5. Wye-Delta Starters

Reduced voltage starters can only be used where low starting torque is acceptable or a means exists to remove the load from the motor or application before it is stopped.
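The trade-off between in-rush current and starting torque can be estimated with a widely used approximation for induction motors: locked-rotor motor current scales roughly in proportion to the applied voltage, while starting torque scales roughly with the square of the voltage. The sketch below applies that approximation; the function name and the example figures (600 A in-rush, 100 lb-ft starting torque, 65% voltage) are hypothetical, and the result describes motor current rather than line current, which can differ for autotransformer starters.

```python
def reduced_voltage_start(
    voltage_fraction: float,
    full_voltage_inrush_amps: float,
    full_voltage_torque_lb_ft: float,
) -> tuple[float, float]:
    """Approximate motor in-rush current and starting torque at reduced voltage."""
    if not 0.0 < voltage_fraction <= 1.0:
        raise ValueError("voltage_fraction must be in the range (0, 1]")
    motor_current = full_voltage_inrush_amps * voltage_fraction
    starting_torque = full_voltage_torque_lb_ft * voltage_fraction ** 2
    return motor_current, starting_torque


# Hypothetical motor started at 65 % of line voltage.
current, torque = reduced_voltage_start(0.65, 600.0, 100.0)
print(round(current, 1), round(torque, 2))
```

Because torque falls faster than current, a start at 65% voltage leaves well under half of the full-voltage starting torque, which is why the driven load must tolerate low starting torque.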

What is Industrial Ethernet?

Ethernet is a local-area network (LAN) technology developed at Xerox Corporation in the mid-1970s and later standardized in cooperation with DEC and Intel. Ethernet uses a bus or star topology, and supports data transfer rates of 10 Mbps (standard) or 100 Mbps (using the newer 100Base-T version).

The Ethernet specification served as the basis for the IEEE 802.3 standard, which specifies the physical and lower software layers. Ethernet uses the CSMA/CD access method to handle simultaneous demands. It is one of the most widely implemented LAN standards.

OSI Reference Model

The Open Systems Interconnection (OSI) reference model was developed by the International Organization for Standardization (ISO).

It is designed to allow open systems to connect to and communicate with other systems.

It consists of seven layers: a complex structure is partitioned into a number of independent functional layers.

Each layer provides a set of services by performing some well-defined sets of functions. These services are provided by the layered-specific functional entities.

Services at a layer can only be accessed from the layer immediately above it.

Each layer uses only a well-defined set of services provided by the layer below.

Protocols operate between "peer" entities in the different end systems (peer-to-peer protocol rules).

Advantages:

More manageable - Layer N is smaller and built only on Layer (N-1).

Modularity - Different layers can be developed separately, and each layer can be modified without affecting other layers as long as the interfaces with adjacent layers are kept unchanged.

Brief Description of model in Each Layer

Physical Layer

The physical layer is responsible for passing bits onto and receiving them from the communication channel.

This layer has no understanding of the meaning of the bits, but deals with the electrical and mechanical characteristics of the signals and signalling methods.

Data Link Layer

The data link layer is responsible for both point-to-point and broadcast network data transmission.

It hides characteristics of the physical layer (e.g. transmission hardware) from the upper layers.

It is also responsible for grouping transmitted bits into frames.

It makes the transmission line appear error free to the upper layers by adding error control and flow control.

Network Layer

The network layer is responsible for controlling the operation of routers and subnets.

It also handles the formation and routing of packets from source to destination with congestion control.

Transport Layer

The transport layer is a software protocol layer that controls packet delivery, crash recovery and transmission reliability between sender and receiver.

Multiplexing between transport and network connections is possible.

Session Layer

Session layer provides dialogue control and token management.

Presentation Layer

When data is transmitted between different types of computer systems, the presentation layer negotiates and manages the way data is represented and encoded.

It is essentially a 'null' layer in cases where such transformations are unnecessary.

Application Layer

This top layer defines the language and syntax that programs use to communicate with other programs. For example, a program in a client workstation uses commands to request data from a program in the server.

Common functions at this layer are opening, closing, reading and writing files, transferring files and e-mail messages, executing remote jobs and obtaining directory information about network resources.
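The layering principle described in this section, where each layer uses only the service of the layer below and talks to its peer on the other system, can be illustrated with a toy encapsulation example. This is a deliberately simplified sketch: real protocols add binary headers and trailers rather than text tags, the physical layer transmits bits rather than wrapping data, and the function names are hypothetical.

```python
# Layers listed from top (closest to the program) to bottom (closest to the wire).
LAYERS = [
    "Application",
    "Presentation",
    "Session",
    "Transport",
    "Network",
    "Data Link",
    "Physical",
]


def encapsulate(payload: str) -> str:
    """Sender side: each layer wraps whatever the layer above handed down."""
    unit = payload
    for layer in LAYERS:
        unit = f"[{layer}|{unit}]"
    return unit


def decapsulate(unit: str) -> str:
    """Receiver side: each layer strips only its own peer's wrapper, bottom-up."""
    for layer in reversed(LAYERS):
        prefix = f"[{layer}|"
        if not (unit.startswith(prefix) and unit.endswith("]")):
            raise ValueError(f"malformed unit at {layer} layer")
        unit = unit[len(prefix):-1]
    return unit


wire = encapsulate("read file")
print(wire)
print(decapsulate(wire))
```

Each layer inspects only its own wrapper, which mirrors the modularity advantage: a layer can change its internal format without affecting the others as long as the hand-off between adjacent layers stays the same.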

From Process Automation and Control

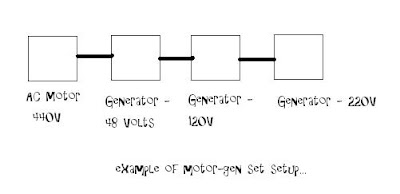

Motor Generator Set (MG set)

A motor generator set is a combination of an electrical generator and an engine mounted together to form a single piece of equipment. A motor generator set is also referred to as a genset, or more commonly, a generator.

Motor generator sets are used throughout industry to provide electrical power on demand. Typical applications include emergency power backup applications for the medical industry, remote sites, or any industrial or commercial application requiring an independent or remote power source. Motor generator sets are available in most standard power delivery configurations. Power plant installations include gas turbines, diesel and gas reciprocating engines. Motor generator sets may produce alternating current power, or direct current.

Motor generator sets can be customized with a wide range of options. These options include a fuel tank, an engine speed regulator and a generator voltage regulator, monitoring devices ranging from simple electrical instruments to more advanced digital controls, wireless communication, automatic starting capabilities, and more.

The term also describes a motor and one or more generators, with their shafts mechanically coupled, used to convert an available power source to another desired frequency or voltage. The motor of the set is selected to operate from the available power supply; the generators are designed to provide the desired output.

The principal advantage of a motor-generator set over other conversion systems is the flexibility offered by the use of separate machines for each function. Since a double energy conversion is involved, electrical to mechanical and back to electrical, the efficiency is lower than in most other conversion methods.

Selecting a motor generator set for electrical power generation requires a thorough understanding of power requirements, installation requirements, regulations, and cost attributes. For AC generator sets, specifications will include the AC power rating, and for DC sets they will include the DC power rating, both expressed in watts (W). Engine-generators are available in a wide range of power ratings. These range from small portable units which can supply several hundred watts of power up to million-watt stationary or trailer-mounted units.
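The efficiency penalty of the double conversion in a coupled motor and generator can be seen by multiplying the efficiencies of the two machines. The sketch below assumes no additional coupling losses, and the 92% and 90% figures are hypothetical example values, not data from the text.

```python
def mg_set_efficiency(motor_efficiency: float, generator_efficiency: float) -> float:
    """Overall efficiency of electrical -> mechanical -> electrical conversion."""
    return motor_efficiency * generator_efficiency


def required_input_kw(output_kw: float, motor_efficiency: float,
                      generator_efficiency: float) -> float:
    """Electrical input needed to deliver a given electrical output."""
    return output_kw / mg_set_efficiency(motor_efficiency, generator_efficiency)


overall = mg_set_efficiency(0.92, 0.90)
print(round(overall, 3))                              # overall efficiency
print(round(required_input_kw(100.0, 0.92, 0.90), 1))  # kW in for 100 kW out
```

The combined figure is always lower than either machine's own efficiency, which is the cost paid for the flexibility of separate machines.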

Calibrating HART Transmitters

Introduction

This paper assumes that you want to make use of the digital process values that are available from a HART transmitter. However, before examining calibration requirements, we must first establish a basic understanding in two areas: calibration concepts, and the operation of a HART instrument.

Calibration Concepts

The ISA Instrument Calibration Series [1] defines calibration as "Determination of the experimental relationship between the quantity being measured and the output of the device which measures it; where the quantity measured is obtained through a recognized standard of measurement." There are two fundamental operations involved in calibrating any instrument:

- Testing the instrument to determine its performance,

- Adjusting the instrument to perform within specification.

Testing an instrument at a single input point involves the following steps:

1. Using a process variable simulator that matches the input type of the instrument, set a known input to the instrument.

2. Using an accurate calibrator, read the actual (or reference) value of this input.

3. Read the instrument's interpretation of the value by using an accurate calibrator to measure the instrument output.

By repeating this process for a series of different input values, you can collect sufficient data to determine the instrument's accuracy. Depending upon the intended calibration goals and the error calculations desired, the test procedure may require from 5 to 21 input points.

The first test that is conducted on an instrument before any adjustments are made is called the As-Found test. If the accuracy calculations from the As-Found data are not within the specifications for the instrument, then it must be adjusted.

Adjustment is the process of manipulating some part of the instrument so that its input to output relationship is within specification. For conventional instruments, this may be zero and span screws. For HART instruments, this normally requires the use of a communicator to convey specific information to the instrument. Often you will see the term calibrate used as a synonym for adjust.

After adjusting the instrument, a second multiple point test is required to characterize the instrument and verify that it is within specification over the defined operating range. This is called the As-Left test.

It is absolutely essential that the accuracy of the calibration equipment be matched to the instrument being calibrated. Years ago, a safe rule of thumb stated that the calibrator should be an order of magnitude (10 times) more accurate than the instrument being calibrated. As the accuracy of field instruments increased, the recommendation dropped to a ratio of 4 to 1. Many common calibrators in use today do not even meet this ratio when compared to the rated accuracy of HART instruments.
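The 4 to 1 rule of thumb can be checked with a simple ratio of the instrument's rated accuracy to the calibrator's accuracy, both expressed in the same units (for example percent of span). The sketch below is illustrative only: the function names and the example accuracy figures are assumptions.

```python
def accuracy_ratio(instrument_accuracy_pct: float, calibrator_accuracy_pct: float) -> float:
    """How many times more accurate the calibrator is than the instrument."""
    return instrument_accuracy_pct / calibrator_accuracy_pct


def calibrator_is_adequate(instrument_accuracy_pct: float,
                           calibrator_accuracy_pct: float,
                           required_ratio: float = 4.0) -> bool:
    """True if the calibrator meets the required accuracy ratio."""
    return accuracy_ratio(instrument_accuracy_pct, calibrator_accuracy_pct) >= required_ratio


# Hypothetical figures: the same 0.05 % calibrator against two instruments.
print(calibrator_is_adequate(0.5, 0.05))  # conventional 0.5 % instrument: ratio of about 10
print(calibrator_is_adequate(0.1, 0.05))  # 0.1 % smart transmitter: ratio of about 2
```

The second case shows the situation described above: a calibrator that was ample for older instruments can fall short of 4 to 1 against a more accurate transmitter.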

Error Calculations

Error calculations are the principal analysis performed on the As-Found and As-Left test data. There are several different types of error calculations, most of which are defined in the publication "Process Instrumentation Terminology". All of the ones discussed here are usually expressed in terms of the percent of ideal span, which is defined as:

% span = (reading - low range value) / (high range value - low range value) × 100

The first step in the data analysis is to convert the engineering unit values for input and output into percent of span. Then for each point, calculate the error, which is the deviation of the actual output from the expected output. Table 1 gives a set of example data and error calculations, while Figure 1 graphically illustrates the resulting errors.
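The two analysis steps, converting to percent of span and then taking the deviation of actual from expected output, can be sketched as follows. The example assumes a hypothetical 0 to 100 psi transmitter with a 4 to 20 mA output and a linear transfer function; the function names and test values are illustrative and are not taken from Table 1.

```python
def percent_of_span(value: float, lower: float, upper: float) -> float:
    """Express a reading as a percentage of the range it belongs to."""
    return (value - lower) / (upper - lower) * 100.0


def point_error(input_value: float, output_value: float,
                input_range: tuple[float, float],
                output_range: tuple[float, float]) -> float:
    """Error at one test point, in percent of span (actual minus expected)."""
    expected_pct = percent_of_span(input_value, *input_range)
    actual_pct = percent_of_span(output_value, *output_range)
    return actual_pct - expected_pct


# Hypothetical test point: 50 psi applied, 12.08 mA measured.
error = point_error(50.0, 12.08, (0.0, 100.0), (4.0, 20.0))
print(round(error, 3))  # percent of span
```

Repeating this for every test point gives the error list on which the maximum, zero, span, linearity, and hysteresis calculations operate.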

It is important to note that the number of data points collected and the order in which they are collected both affect the types of error calculations that can be performed. The maximum error is the most common value used to evaluate an instrument's performance. If a computer program is not used to analyze the test data, it is often the only error considered and is taken to be the largest deviation from the ideal output. A more accurate value can be obtained by fitting an equation to the error data and calculating the maximum and minimum points on the curve. In practice, this should only be done if there are at least five data points reasonably spaced over the instrument range. Otherwise, it is unlikely that the curve fit will give reasonable values between the data points.

By itself, the maximum error does not give a complete indication of an instrument's performance. With the availability of computer software to facilitate calculations, other error values are gaining popularity including zero error, span error, linearity error, and hysteresis error.

Zero error is defined as the error of a device when the input is at the lower range value. Span error is defined as the difference between the actual span and the ideal span, expressed as a percentage of the ideal span.
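These two definitions translate directly into arithmetic. The sketch below assumes a 4 to 20 mA output and expresses zero error in percent of ideal span, consistent with the other error values; the measured outputs (4.02 mA and 20.06 mA) are hypothetical, as are the function names.

```python
def zero_error_pct(output_at_lower: float, ideal_lower: float, ideal_upper: float) -> float:
    """Error when the input is at the lower range value, in percent of ideal span."""
    ideal_span = ideal_upper - ideal_lower
    return (output_at_lower - ideal_lower) / ideal_span * 100.0


def span_error_pct(output_at_lower: float, output_at_upper: float,
                   ideal_lower: float, ideal_upper: float) -> float:
    """Difference between actual and ideal span, in percent of ideal span."""
    ideal_span = ideal_upper - ideal_lower
    actual_span = output_at_upper - output_at_lower
    return (actual_span - ideal_span) / ideal_span * 100.0


# Hypothetical readings at 0 % and 100 % input on a 4-20 mA transmitter.
print(round(zero_error_pct(4.02, 4.0, 20.0), 3))
print(round(span_error_pct(4.02, 20.06, 4.0, 20.0), 3))
```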

Linearity error is a measure of how close the error of the instrument over its operating range approaches a straight line. Unfortunately, there are three different methods used to calculate this, resulting in an independent linearity, a terminal based linearity, and a zero based linearity. In practice, it is best to choose one method and apply it consistently. Note that the calculation of linearity error is also greatly facilitated by a curve fit of the error data.

Hysteresis error is a measure of the dependence of the output at a given input value upon the prior history of the input. This is the most difficult error to measure since it requires great care in the collection of data, and it typically requires at least 9 data points to develop reasonable curves for the calculations. Thus a technician must collect at least five data points traversing in one direction, followed by at least four more in the opposite direction, so that each leg has five points, including the inflection point.
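One simple way to estimate hysteresis from such a nine-point test is to compare the errors recorded at the same input on the upscale and downscale legs and take the largest difference. This is a simplified sketch that skips the curve fitting mentioned in the text; the data values and the function name are hypothetical.

```python
# (input % of span, error % of span), recorded in test order.
UPSCALE = [(0, -0.05), (25, 0.02), (50, 0.10), (75, 0.08), (100, 0.04)]
DOWNSCALE = [(75, 0.15), (50, 0.20), (25, 0.10), (0, 0.00)]


def hysteresis_error(upscale, downscale) -> float:
    """Largest upscale/downscale disagreement at any shared input point."""
    up_by_input = dict(upscale)
    differences = [
        abs(down_error - up_by_input[inp])
        for inp, down_error in downscale
        if inp in up_by_input
    ]
    if not differences:
        raise ValueError("no input points are common to both legs")
    return max(differences)


print(round(hysteresis_error(UPSCALE, DOWNSCALE), 3))  # percent of span
```

The 100% point appears only once because it is the inflection point shared by both legs.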

If any of these errors is greater than or equal to the desired accuracy for a test, then the instrument has failed and must be adjusted.

Performance over time

In order to adequately assess the long term performance of an instrument, it must be calibrated on a regular schedule. You may also want to determine how often an instrument really needs to be calibrated by examining its change in performance over time. To do this, you must collect both As-Found and As-Left data. By comparing the As-Left data from one calibration to the As-Found of the next calibration, you can determine the instrument's drift. By varying the interval between calibrations, you can determine the optimum calibration interval which allows the instrument to remain within the desired accuracy.
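Drift between two calibrations can be estimated point by point as the As-Found error of the later calibration minus the As-Left error of the earlier one. The sketch below assumes both tests used the same input points in the same order; the error values (in percent of span) and the function name are hypothetical.

```python
def drift_per_point(as_left_errors, as_found_errors):
    """Change in error at each test point between consecutive calibrations."""
    if len(as_left_errors) != len(as_found_errors):
        raise ValueError("both tests must use the same input points")
    return [found - left for left, found in zip(as_left_errors, as_found_errors)]


# Hypothetical errors at 0 %, 50 % and 100 % input.
previous_as_left = [0.01, 0.02, 0.00]
current_as_found = [0.05, 0.10, 0.03]

drift = drift_per_point(previous_as_left, current_as_found)
worst = max(abs(d) for d in drift)
print([round(d, 3) for d in drift], round(worst, 3))
```

Dividing the worst-case drift by the time between the two calibrations gives a drift rate that can help when choosing the calibration interval.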

HART Fundamentals

HART is an acronym for Highway Addressable Remote Transducer. Originally developed by Rosemount in 1986, the HART protocol is now a mature, industry-proven technology. Because Rosemount chose to make HART an open protocol, many manufacturers incorporated it into their products. It has now become a de facto standard for field communication with instruments. In 1993, to ensure its continued growth and acceptance, Rosemount transferred ownership of the protocol to the HART Communication Foundation (HCF).

HART products generally fall into one of three categories: field devices, host systems, and communication support hardware. Field devices include transmitters, valves, and controllers. There are HART transmitters for almost any standard process measurement including pressure, temperature, level, flow, and analytical (pH, ORP, density). Host systems range from small hand-held communicators to PC based maintenance management software to large scale distributed control systems. Communication support hardware includes simple single loop modems as well as an assortment of multiplexers that allow a host system to communicate with a large number of field devices. In this paper, the general term "communicator" will be used to refer to any HART host that can communicate with a field device.

HART is a transition technology that provides for the continued use of the industry standard 4-20 mA current loop while also introducing many of the capabilities and benefits associated with a digital field bus system. The complete technical specification is available from the HART Communication Foundation. HART follows the basic Open Systems Interconnection (OSI) reference model. The OSI model describes the structure and elements of a communication system. The HART protocol uses a reduced OSI model, implementing only layers 1, 2 and 7. Since the details for layers 1 (Physical) and 2 (Link) do not directly impact calibration, they are not discussed here. Layer 7, the Application layer, consists of three classes of HART commands: Universal, Common Practice, and Device Specific. Universal commands are implemented by all HART hosts and field devices. They are primarily used by a host to identify a field device and read process data.

The Common Practice command set defines functions that are generally applicable to many field devices. This includes items such as changing the range, selecting engineering units, and performing self tests. Although each field device implements only those Common Practice commands which are pertinent to its operation, this still provides for a reasonable level of commonality between field devices.

Device Specific commands are different for each field device. It is through these commands that unique calibration and configuration functions are implemented. For example, when configuring an instrument for operation, only temperature transmitters need to be able to change the type of probe attached, while flow meters often need to have information about pipe sizes, calibration factors, and fluid properties. Also, the calibration procedure for a pressure transmitter is obviously different from that for a valve.

It is important to note that in most cases, proper calibration of a HART instrument requires the use of a communicator that is capable of issuing device specific commands.

Calibrating a Conventional Instrument

For a conventional 4-20 mA instrument, a multiple point test that stimulates the input and measures the output is sufficient to characterize the overall accuracy of the transmitter. The normal calibration adjustment involves setting only the zero value and the span value, since there is effectively only one adjustable operation between the input and output as illustrated below.

This procedure is often referred to as a Zero and Span Calibration. If the relationship between the input and output range of the instrument is not linear, then you must know the transfer function before you can calculate expected outputs for each input value. Without knowing the expected output values, you cannot calculate the performance errors.
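As a rough illustration of why the transfer function must be known, the sketch below computes the expected loop current for a given input and the resulting error. The function names are hypothetical, only linear and square root transfer functions are covered, and expressing the error in percent of the 16 mA output span is an assumption.

```python
import math


def expected_ma(value, lower, upper, transfer="linear"):
    """Expected 4-20 mA output for an input, given the range and transfer function."""
    fraction = (value - lower) / (upper - lower)
    if transfer == "sqrt":
        # Square root extraction, clamped so a slightly negative input does not fail.
        fraction = math.sqrt(max(fraction, 0.0))
    elif transfer != "linear":
        raise ValueError("unsupported transfer function: " + transfer)
    return 4.0 + 16.0 * fraction


def error_percent_of_span(measured_ma, expected):
    """Error of a measured output relative to the 16 mA output span (assumed convention)."""
    return (measured_ma - expected) / 16.0 * 100.0
```

For a 0 to 100 range, an input of 50 should give 12 mA with a linear transfer function, while with a square root transfer function 12 mA corresponds to an input of 25. Using the wrong function would therefore produce large apparent errors on a healthy instrument.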

Calibrating a HART Instrument

The Parts of a HART Transmitter

For a HART instrument, a multiple point test between input and output does not provide an accurate representation of the transmitter's operation. Just like a conventional transmitter, the measurement process begins with a technology that converts a physical quantity into an electrical signal. However, the similarity ends there. Instead of a purely mechanical or electrical path between the input and the resulting 4-20 mA output signal, a HART transmitter has a microprocessor that manipulates the input data. As shown in Figure 4, there are typically three calculation sections involved, and each of these sections may be individually tested and adjusted.

Just prior to the first box, the instrument's microprocessor measures some electrical property that is affected by the process variable of interest. The measured value may be millivolts, capacitance, reluctance, inductance, frequency, or some other property. However, before it can be used by the microprocessor, it must be transformed to a digital count by an analog to digital (AD) converter.

In the first box, the microprocessor must rely upon some form of equation or table to relate the raw count value of the electrical measurement to the actual property (PV) of interest such as temperature, pressure, or flow. The principal form of this table is usually established by the manufacturer, but most HART instruments include commands to perform field adjustments. This is often referred to as a sensor trim. The output of the first box is a digital representation of the process variable. When you read the process variable using a communicator, this is the value that you see.

The second box is strictly a mathematical conversion from the process variable to the equivalent milliamp representation. The range values of the instrument (related to the zero and span values) are used in conjunction with the transfer function to calculate this value. Although a linear transfer function is the most common, pressure transmitters often have a square root option. Other special instruments may implement common mathematical transformations or user defined break point tables. The output of the second block is a digital representation of the desired instrument output. When you read the loop current using a communicator, this is the value that you see. Many HART instruments support a command which puts the instrument into a fixed output test mode. This overrides the normal output of the second block and substitutes a specified output value.

The third box is the output section where the calculated output value is converted to a count value that can be loaded into a digital to analog converter. This produces the actual analog electrical signal. Once again the microprocessor must rely on some internal calibration factors to get the output correct. Adjusting these factors is often referred to as a current loop trim or 4-20 mA trim.

HART Calibration Requirements

Based on this analysis, you can see why a proper calibration procedure for a HART instrument is significantly different than for a conventional instrument. The specific calibration requirements depend upon the application.

If the application uses the digital representation of the process variable for monitoring or control, then the sensor input section must be explicitly tested and adjusted. Note that this reading is completely independent of the milliamp output, and has nothing to do with the zero or span settings. The PV as read via HART communication continues to be accurate even when it is outside the assigned output range. For example, a range 2 Rosemount 3051C has sensor limits of -250 to +250 inches of water. If you set the range to 0 to 100 inches of water, and then apply a pressure of 150 inches of water, the analog output will saturate at just above 20 milliamps. However, a communicator can still read the correct pressure. If the current loop output is not used (that is, the transmitter is used as a digital-only device), then the input section calibration is all that is required. If the application uses the milliamp output, then the output section must be explicitly tested and calibrated. Note that this calibration is independent of the input section, and again, has nothing to do with the zero and span settings.
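The three sections and the saturation example can be modeled with a small sketch. Everything here is a simplification for illustration: the class and field names are hypothetical, each section's error is reduced to a single offset, only a linear transfer function is modeled, and the saturation limits of 3.9 and 20.8 mA are assumed values, not taken from any data sheet.

```python
from dataclasses import dataclass

SAT_LOW_MA = 3.9    # assumed low saturation current
SAT_HIGH_MA = 20.8  # assumed high saturation current


@dataclass
class HartTransmitterModel:
    """Toy model of the three calculation sections of a HART transmitter."""
    lower_range: float = 0.0       # PV value mapped to 4 mA
    upper_range: float = 100.0     # PV value mapped to 20 mA
    sensor_offset: float = 0.0     # input-section error (0 when the sensor trim is good)
    output_offset_ma: float = 0.0  # output-section error (0 when the loop trim is good)

    def digital_pv(self, applied: float) -> float:
        """Section 1: the PV a communicator reads; independent of the range."""
        return applied + self.sensor_offset

    def digital_ma(self, applied: float) -> float:
        """Section 2: range conversion (linear only), limited to the saturation values."""
        span = self.upper_range - self.lower_range
        ma = 4.0 + 16.0 * (self.digital_pv(applied) - self.lower_range) / span
        return min(max(ma, SAT_LOW_MA), SAT_HIGH_MA)

    def analog_ma(self, applied: float) -> float:
        """Section 3: the current actually produced on the loop."""
        return self.digital_ma(applied) + self.output_offset_ma
```

With the default 0 to 100 range, applying 150 inches of water leaves `digital_pv` at 150 while `analog_ma` is pinned at the assumed saturation value, which mirrors the behavior described in the example.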

Calibrating the Input Section

The same basic multiple point test and adjust technique is employed, but with a new definition for output. To run a test, use a calibrator to measure the applied input, but read the associated output (PV) with a communicator. Error calculations are simpler since there is always a linear relationship between the input and output, and both are recorded in the same engineering units. In general, the desired accuracy for this test will be the manufacturer's accuracy specification. If the test does not pass, then follow the manufacturer's recommended procedure for trimming the input section. This may be called a sensor trim and typically involves one or two trim points. Pressure transmitters also often have a zero trim, where the input calculation is adjusted to read exactly zero (not low range). Do not confuse a trim with any form of re-ranging or any procedure that involves using zero and span buttons.

Calibrating the Output Section

Again, the same basic multiple point test and adjust technique is employed, but with a new definition for input. To run a test, use a communicator to put the transmitter into a fixed current output mode. The input value for the test is the mA value that you instruct the transmitter to produce. The output value is obtained using a calibrator to measure the resulting current. This test also implies a linear relationship between the input and output, and both are recorded in the same engineering units (milliamps). The desired accuracy for this test should also reflect the manufacturer's accuracy specification.

If the test does not pass, then follow the manufacturer's recommended procedure for trimming the output section. This may be called a 4-20 mA trim, a current loop trim, or a D/A trim. The trim procedure should require two trim points close to or just outside of 4 and 20 mA. Do not confuse this with any form of re-ranging or any procedure that involves using zero and span buttons.
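The pass/fail decision for the output section test can be sketched as follows. The function name is hypothetical, and the error is expressed in percent of the 16 mA span as an assumed convention. The instrument fails if any error is greater than or equal to the desired accuracy.

```python
def output_section_test(commanded_ma, measured_ma, tolerance_percent):
    """Compare commanded fixed-output currents with calibrator readings.

    Returns (errors, passed), where errors are in percent of the 16 mA span
    and passed is True only if every error is smaller than the tolerance.
    """
    errors = [(m - c) / 16.0 * 100.0 for c, m in zip(commanded_ma, measured_ma)]
    passed = all(abs(e) < tolerance_percent for e in errors)
    return errors, passed
```

For example, commanding 4, 12, and 20 mA and measuring 4.004, 12.000, and 20.020 mA gives a worst error of about 0.125 percent of span, which fails a 0.1 percent requirement and calls for an output trim.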

Testing Overall Performance

After calibrating both the Input and Output sections, a HART transmitter should operate correctly. The middle block in Figure 4 only involves computations. That is why you can change the range, units, and transfer function without necessarily affecting the calibration. Notice also that even if the instrument has an unusual transfer function, it only operates in the conversion of the input value to a milliamp output value, and therefore is not involved in the testing or calibration of either the input or output sections.

If there is a desire to validate the overall performance of a HART transmitter, run a Zero and Span test just like a conventional instrument. As you will see in a moment, however, passing this test does not necessarily indicate that the transmitter is operating correctly.

Effect of Damping on Test Performance

Many HART instruments support a parameter called damping. If this is not set to zero, it can have an adverse effect on tests and adjustments. Damping induces a delay between a change in the instrument input and the detection of that change in the digital value for the instrument input reading and the corresponding instrument output value. This damping induced delay may exceed the settling time used in the test or calibration. The settling time is the amount of time the test or calibration waits between setting the input and reading the resulting output. It is advisable to adjust the instrument's damping value to zero prior to performing tests or adjustments. After calibration, be sure to return the damping constant to its required value.
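To get a feel for how damping interacts with settling time, the sketch below assumes that damping behaves like a first-order lag whose time constant equals the damping value. That model is an assumption made for illustration; actual instruments may filter differently, so consult the manufacturer's documentation.

```python
import math


def fraction_settled(elapsed_s, damping_s):
    """Fraction of a step change visible in the reading after elapsed_s seconds."""
    if damping_s <= 0:
        return 1.0  # no damping: the reading follows the input immediately
    return 1.0 - math.exp(-elapsed_s / damping_s)


def required_settling_time(damping_s, residual_fraction):
    """Seconds to wait until only residual_fraction of the step remains unsettled."""
    if damping_s <= 0:
        return 0.0
    return -damping_s * math.log(residual_fraction)
```

Under this assumption, a damping value of 2 seconds shows only about 63 percent of a step after 2 seconds, and needs roughly 14 seconds before the residual falls to 0.1 percent of the step. A test that waits only a few seconds would record readings that have not yet settled.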

Operations that are NOT Proper Calibrations

Digital Range Change

There is a common misconception that changing the range of a HART instrument by using a communicator somehow calibrates the instrument. Remember that a true calibration requires a reference standard, usually in the form of one or more pieces of calibration equipment to provide an input and measure the resulting output. Therefore, since a range change does not reference any external calibration standards, it is really a configuration change, not a calibration. Notice that in the HART transmitter block diagram (Figure 4), changing the range only affects the second block. It has no effect on the digital process variable as read by a communicator.

Zero and Span Adjustment

Using only the zero and span adjustments to calibrate a HART transmitter (the standard practice associated with conventional transmitters) often corrupts the internal digital readings. You may not have noticed this if you never use a communicator to read the range or digital process data. As shown in Figure 4, there is more than one output to consider. The digital PV and milliamp values read by a communicator are also outputs, just like the analog current loop.

Consider what happens when using the external zero and span buttons to adjust a HART instrument. Suppose that an instrument technician installs and tests a differential pressure transmitter that was set at the factory for a range of 0 to 100 inches of water. Testing the transmitter reveals that it now has a 1 inch of water zero shift. Thus with both ports vented (zero), its output is 4.16 mA instead of 4.00 mA, and when applying 100 inches of water, the output is 20.16 mA instead of 20.00 mA. To fix this he vents both ports and presses the zero button on the transmitter. The output goes to 4.00 mA, so it appears that the adjustment was successful.

However, if he now checks the transmitter with a communicator, he will find that the range is 1 to 101 inches of water, and the PV is 1 inch of water instead of 0. The zero and span buttons changed the range (the second block). This is the only action that the instrument can take under these conditions since it does not know the actual value of the reference input. Only by using a digital command which conveys the reference value can the instrument make the appropriate internal adjustments.

The proper way to correct a zero shift condition is to use a zero trim. This adjusts the instrument input block so that the digital PV agrees with the calibration standard. If you intend to use the digital process values for trending, statistical calculations, or maintenance tracking, then you should disable the external zero and span buttons and avoid using them entirely.
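The difference between pressing the zero button and performing a zero trim can be reproduced with the numbers from the example. The helper names are hypothetical, the sensor error is reduced to a single offset, and a linear transfer function is assumed.

```python
def loop_ma(pv, lower, upper):
    """Linear range conversion from the digital PV to the milliamp value."""
    return 4.0 + 16.0 * (pv - lower) / (upper - lower)


def press_zero_button(pv_now, lower, upper):
    """Re-range: shift the range so the present PV maps to 4 mA, keeping the span.

    The instrument is not told the true input, so it can only move the range.
    """
    span = upper - lower
    return pv_now, pv_now + span


def zero_trim(sensor_offset, pv_now, reference=0.0):
    """Zero trim: correct the input-section offset so the PV equals the reference."""
    return sensor_offset - (pv_now - reference)
```

With a 1 inch shift and both ports vented, `loop_ma(1.0, 0.0, 100.0)` gives 4.16 mA. After `press_zero_button(1.0, 0.0, 100.0)` the range is 1 to 101 and the loop reads 4.00 mA, but the PV is still 1. After `zero_trim(1.0, 1.0)` the offset is 0, so the PV reads 0 and the range stays at 0 to 100.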

Loop Current Adjustment

Another observed practice among instrument technicians is to use a hand-held communicator to adjust the current loop so that an accurate input to the instrument agrees with some display device on the loop. If you are using a Rosemount model 268 communicator, this is a "current loop trim using other scale." Refer again to the zero drift example just before pressing the zero button. Suppose there is also a digital indicator in the loop that displays 0.0 at 4 mA, and 100.0 at 20 mA. During testing, it read 1.0 with both ports vented, and it read 101.0 with 100 inches of water applied. Using the communicator, the technician performs a current loop trim so that the display reads correctly at 0 and 100, essentially correcting the output to be 4 and 20 mA respectively.

While this also appears to be successful, there is a fundamental problem with this procedure. To begin with, the communicator will show that the PV still reads 1 and 101 inches of water at the test points, and the digital reading of the mA output still reads 4.16 and 20.16 mA, even though the actual output is 4 and 20 mA. The calibration problem in the input section has been hidden by introducing a compensating error in the output section, so that neither of the digital readings agrees with the calibration standards.

Conclusion

While there are many benefits to be gained by using HART transmitters, it is essential that they be calibrated using a procedure that is appropriate to their function. If the transmitter is part of an application that retrieves digital process values for monitoring or control, then the standard calibration procedures for conventional instruments are inadequate. At a minimum, the sensor input section of each instrument must be calibrated. If the application also uses the current loop output, then the output section must also be calibrated.

References

[1] HART Communication Foundation, "HART - Smart Communications Protocol Specification", Revision 5.2, November 3, 1993.

[2] Bell System Technical Reference, PUB 41212, "Data Sets 202S and 202T Interface Specification", July 1976.

[3] HART Communication Foundation pamphlet, "HART Field Communications Protocol".

[4] Holladay, Kenneth L., "Using the HART® Protocol to Manage for Quality", ISA 1994 paper number 94-617.

[5] Applied System Technologies, Inc., "User's Manual for Cornerstone Base Station", Revision 2.0.1 I, April 1995.

[6] ANSI/ISA-S51.1-1979, "Process Instrumentation Terminology".

[7] Instrument Society of America, "Instrument Calibration Series - Principles of Calibration", 1989.

[8] Instrument Society of America, "Instrument Calibration Series - Calibrating Pressure and Temperature Instruments", 1989.

HART: The Tutorial

HIGHWAY ADDRESSABLE REMOTE TRANSDUCER

The HART® protocol is a powerful communication technology used to realise the full potential of digital field devices whilst preserving the traditional 4-20mA signal. The HART® protocol extends the system capabilities for two way digital communication with smart instruments.

HART® offers the best solution for smart field device communications and has the widest base of support of any field device protocol worldwide. More instruments are available with the HART® protocol than any other digital communications technology. Almost any process application can be addressed by one of the products offered by HART® instrument suppliers. Unlike other digital communication methods, the HART® protocol gives a unique communication solution in that it is backward compatible with currently installed instrumentation. This ensures that investments in existing cabling and current control strategies remain secure into the future.

The HART® digital signal is superimposed onto the standard 4-20mA signal. It uses the Bell 202 standard Frequency Shift Keying (FSK) signal to communicate at 1200 baud. The digital signal is made up of two frequencies, 1200Hz and 2200Hz, representing bits 1 and 0 respectively. Sine waves of these two frequencies are superimposed onto the analogue signal cables to give simultaneous analogue and digital communications. As the average value of the FSK signal is always zero there is no effect on the 4-20mA analogue signal. A minimum loop impedance of 230 ohms is required for communication.
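The bit-to-frequency mapping can be illustrated with a short waveform generator. This is a sketch of the idea only, not a Bell 202 modem: the function name, the 48 kHz sample rate, and the unit amplitude are assumptions, and real hardware also shapes and filters the signal.

```python
import math

MARK_HZ = 1200.0   # frequency representing a logical 1
SPACE_HZ = 2200.0  # frequency representing a logical 0
BAUD = 1200        # bits per second


def fsk_samples(bits, sample_rate=48000, amplitude=1.0):
    """Return phase-continuous FSK samples for a sequence of 0/1 bits."""
    samples = []
    phase = 0.0
    samples_per_bit = sample_rate // BAUD
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        step = 2.0 * math.pi * freq / sample_rate
        for _ in range(samples_per_bit):
            samples.append(amplitude * math.sin(phase))
            phase += step  # carrying the phase across bits avoids jumps in the waveform
    return samples
```

At 1200 baud a logical 1 is exactly one cycle of the 1200 Hz tone, so its samples sum to zero. This is a small-scale view of why the superimposed signal does not disturb the average loop current.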

HART® is a master-slave protocol - this means that a field device only replies when it is spoken to. Up to two masters can connect to each HART® loop. The primary master is usually the DCS (Distributed Control System), the PLC (Programmable Logic Controller) or a PC. The secondary master can be a hand held configurator or another PC running an instrument maintenance software package. Slave devices include transmitters, actuators and controllers that respond to commands from the primary or secondary master.

The digital communication signal supports approximately 2-3 data updates per second without interrupting the analogue signal.

HART® Commands

The HART® protocol provides uniform and consistent communication for all field devices via the HART® command set. This includes three types of Command:

Universal

All devices using the HART® protocol must recognise and support these commands. They provide access to information useful in normal operations.

Common Practice

These provide functions implemented by many but not all HART® communication devices.

Device specific

These represent functions that are unique to each field device. They access set up and calibration information as well as information on the construction of the device.

The HART® Communication Protocol is an open standard owned by more than 100 member companies in the HART® Communication Foundation (HCF). The HCF is an independent, non-profit organisation, which provides worldwide support for application of the technology and ensures that the technology is openly available for the benefit of the industry.

Infrared technology targets industrial automation

By Andrew Wilson, Editor

IR cameras are used in industrial-plant monitoring and, increasingly, in industrial automation.

English astronomer Sir William Herschel is credited with the discovery of infrared (IR) radiation in 1800. In his first experiment, Herschel subjected a liquid in a glass thermometer to different colors of the spectrum. Finding that the hottest temperature was beyond red light, Herschel christened his newly found energy "calorific rays," now known as infrared radiation.

Two centuries later, IR imagers and cameras are finding uses in applications from missile guidance tracking to plant monitoring to machine-vision automation systems. Invisible to the human eye, IR energy can be divided into the three spectral regions: near-, mid-, and far-IR, with wavelengths longer than that of visible light. Although the boundaries between these are undetermined, the wavelength ranges are approximately 0.7 to 5 µm (near-IR), 5 to 40 µm (mid-IR), and 40 to 350 µm (far-IR).

However, do not expect today's commercially available IR detectors or cameras to span such large wavelength ranges. Rather, they will be specified as covering narrower bands between approximately 1 and 20 µm. Many manufacturers may use the terms near-, mid-, and far-IR loosely, often claiming that their 9 µm-capable camera is based on a far-IR-based sensor.

Absolute measurement

For the system developer considering an IR camera for process-monitoring applications, the choice of detector will be both manufacturer- and application-specific. Because of this, the systems integrator must gain an understanding of how and what is being measured.

Perhaps one of the largest misconceptions is that IR measures the temperature of an object. This misconception results from Planck's law, which states that all objects with a temperature above absolute zero emit IR radiation and that the higher the temperature the higher the emitted intensity. Planck's law, however, is only true for blackbody objects that have 100% absorption and maximum emitting intensity. In reality, a ratio of the emitting intensity of the object and a corresponding blackbody with the same temperature must be used. This emissivity, the measure of how a material absorbs and emits IR energy, affects how images are interpreted.
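The relationship between blackbody emission and emissivity can be made concrete with Planck's law for spectral radiance. The sketch below uses the standard formula; the function names are hypothetical, and treating emissivity as a single constant (a graybody) is a simplifying assumption, since real materials have emissivity that varies with wavelength and surface condition.

```python
import math

H = 6.62607015e-34  # Planck constant, J*s
C = 2.99792458e8    # speed of light, m/s
K_B = 1.380649e-23  # Boltzmann constant, J/K


def blackbody_radiance(wavelength_m, temp_k):
    """Planck spectral radiance of a blackbody, in W per m^2 per sr per m."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return (2.0 * H * C ** 2 / wavelength_m ** 5) / math.expm1(x)


def emitted_radiance(wavelength_m, temp_k, emissivity):
    """Graybody radiance: the blackbody value scaled by an emissivity between 0 and 1."""
    return emissivity * blackbody_radiance(wavelength_m, temp_k)
```

At the same wavelength and temperature, a surface with emissivity 0.5 emits half the radiance of a blackbody, so a camera that assumes a blackbody would report it as cooler than it really is.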

In the design of its MP50 linescan process imager, Raytek (Santa Cruz, CA, USA) incorporates a reference blackbody for continuous calibration (see Fig. 1). Targeted at continuous-sheet and web-based processes, the scanner offers a 48-line/s scan speed and is offered in a number of versions capable of capturing spectral ranges useful for examining plastics, glass, and metals. Other manufacturers offer blackbodies as accessories that can externally calibrate their cameras.

Since people cannot see IR radiation, the images captured by IR detectors and cameras must first be processed, translated, and pseudocolored into images that can be visualized. In these images, highly reflective materials may appear different from less-reflective materials, even though their temperature is the same. This is because highly reflective materials will reflect the radiation of the objects around them and therefore may appear to be "colder" than less-reflective materials of the same temperature.

Material properties

In considering whether to use IR technology for any particular application, therefore, the properties of the materials being viewed must be known to properly interpret the image. In printed-circuit-board analysis, for example, the emissivity of different metals can be used to discern faults in the board. However, if the emissivity of materials is similar, it may be difficult to discern any differences in the image.

In many applications, including target tracking, this does not pose a problem. In heat-seeking missiles, for example, the difference between the emissivity of aluminium alloy used to build a rocket and the fire that emerges from its boosters is so high that discerning the two is relatively simple. In other applications, the task may be more complex.

Infrared cameras use a number of different detector types that can be broadly classified as either photon or thermal detectors. Infrared absorbed by photon-based detectors generates electrons or bandgap transitions in materials such as mercury cadmium telluride (HgCdTe; detecting IR in the 3- to 5- and 8- to 12-µm ranges) and indium antimonide (InSb; detecting IR in the 3- to 5-µm range). This results in a charge that can be directly measured and read out for preprocessing.

Rather than generate charge or bandgap transitions directly, thermal detectors absorb the IR radiation, raising the temperature of single or multiple membrane-isolated temperature detectors on the device. Unlike photon-based detectors, thermal detectors can be operated at room temperature, although their sensitivity is lower and their response time is longer.

To create a two-dimensional IR image, camera vendors incorporate focal-plane, or staring, arrays into their cameras. These detectors are similar in concept to CCDs in that they are offered in arrays of pixels that can range from as low as 2 x 2 to 640 x 512 formats and higher, often with greater than 8 bits of dynamic range.

Incorporating a thermal detector in the form of an amorphous silicon or vanadate (YVO4) microbolometer, the Eye-R320B from Opgal (Karmiel, Israel) features a 320 x 240 FPA. With a spectral range from 8 to 12 µm, the camera also offers automatic gain correction, remote RS422 programmability, and CCIR or RS170 output. The company also offers embeddable IR camera modules that can use a number of 640 x 480-based detectors from different manufacturers.

Discerning features

As the wavelength of visible light is shorter than that of IR radiation, visible light can discern features within an image at higher resolution. For this reason ultraviolet (UV) radiation, which the human eye also cannot perceive, is used to detect submicron defects in semiconductor wafers. Because the frequency of UV light is higher, the spatial resolution of the optical system is also higher, allowing greater detail to be captured.

Unfortunately, quite the opposite is true of IR radiation. With a lower frequency than visible light, IR radiation will resolve fewer line pairs/millimeter than visible light, given that all other system parameters are equal. Indeed, it is this diffraction-limited nature of optics that leads to the large pixel sizes of IR imagers. And, of course, an IR imager with 320 x 240 format and a pixel pitch of 30 µm will have a die size considerably larger than its 320 x 240 CCD counterpart with a 6-µm pixel pitch. This larger die size for any given format is another reason IR imagers are more expensive than visible imagers.

In many visible machine-vision applications, it is necessary to determine the minimum spatial resolution required by the system. And the same applies when determining whether an IR detector can be used in such an application. This is accomplished visibly by using test charts with periods of white and black lines. If, for example, the required resolution were 125 line pairs/mm, then the pitch of those line pairs would be 8 µm. From Nyquist criteria, it can be determined that the most efficient way to sample the signal is with a 4-µm pixel pitch. A smaller pitch will not add new information, and a larger pitch will result in errors.
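The arithmetic in this example is simple enough to check directly. The function names below are hypothetical; the calculation itself just converts line pairs per millimeter to a period and samples each line pair with two pixels.

```python
def line_pair_period_um(line_pairs_per_mm):
    """Width of one line pair (one dark plus one light line), in micrometers."""
    return 1000.0 / line_pairs_per_mm


def nyquist_pixel_pitch_um(line_pairs_per_mm):
    """Pixel pitch that places two pixels across each line pair."""
    return line_pair_period_um(line_pairs_per_mm) / 2.0
```

For 125 line pairs/mm this gives an 8-µm period and a 4-µm pixel pitch, matching the figures in the text.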

In such optical systems the pixel pitch at the limit of resolution is given by the diffraction-limited equation

$$p = 0.61\,\lambda\,(f/\#)$$

where p is the pixel pitch, λ is the wavelength, and f/# equals the focal length/aperture ratio of the lens. Thus, a pixel pitch of 2.68 µm is needed to resolve a 550-nm visible wavelength at f/8. In an IR system, with a wavelength of 5 µm and the same focal length/aperture ratio, the pixel pitch required will be approximately 25 µm or nine times larger. With a 25-µm pixel pitch, the finest line pairs that can be resolved will have a 50-µm period, which equates to 20 line pairs/mm with an f/8 lens.
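The quoted figures can be reproduced with a short calculation. The sketch assumes the diffraction-limited rule p = 0.61 × λ × f/#, which is inferred from the numbers in the text (it yields 2.68 µm at 550 nm and f/8) rather than taken from a stated formula; the function names are hypothetical.

```python
def diffraction_limited_pitch_um(wavelength_um, f_number):
    """Pixel pitch at the diffraction limit, assuming p = 0.61 * wavelength * f/#."""
    return 0.61 * wavelength_um * f_number


def max_line_pairs_per_mm(pixel_pitch_um):
    """Finest resolvable pattern when each line pair spans two pixels."""
    return 1000.0 / (2.0 * pixel_pitch_um)
```

At f/8 this gives about 2.68 µm for 0.55-µm visible light and 24.4 µm for 5-µm IR, roughly nine times larger. A 25-µm pitch corresponds to a 50-µm line-pair period.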

Luckily, most camera manufacturers specify these parameters. The Stinger IR camera from Ircon (Niles, IL, USA), for example, is specified with an uncooled 320 x 240 FPA, spectral ranges of 5, 8, and 8 to 14 µm, a detector element size of 51 x 51 µm, and an f/1.4 lens (see Fig. 2). The company's literature states that targets as small as 0.017 in. can be measured with the camera, a fact that can be confirmed by some simple mathematics.

To increase this resolution, some manufacturers use lenses with larger numerical apertures (smaller f-numbers). Because glass is opaque to IR radiation, these lenses are usually fabricated from exotic materials such as zinc selenide (ZnSe) or germanium (Ge), adding to the cost of the camera. Like visible solid-state cameras, IR cameras are generally offered with both linescan and area format arrays. While linescan-based cameras are useful in IR web inspection, area-array-based cameras can capture two-dimensional images. Outputs from these cameras are also similar to those of visible cameras and are generally standard NTSC/PAL analog formats or FireWire- and USB-based digital formats.

Future developments

What has, in the past, stood in the way of acceptance of IR techniques in machine-vision systems has been the cost of IR systems compared with their visible counterparts and the lack of an easy way to combine the benefits of both wavelengths in low-cost systems. In the past few years, however, the cost of IR imaging has been lowered by the introduction of smart IR cameras that include on-board detectors, processors, embedded software, and standard interfaces. And, realizing the benefits of a combined visible/IR approach, manufacturers are now starting to introduce more sophisticated imagers that can simultaneously capture visible and near-IR images.

Recently, Indigo Systems (Goleta, CA, USA) announced a new method for processing indium gallium arsenide (InGaAs) to enhance its short-wavelength response. The new material, VisGaAs, is a broad-spectrum substance that enables both near-IR and visible imaging on the same photodetector. According to the company, test results indicate VisGaAs can operate in a range from 0.4 to 1.7 µm. To test the detector, the company mounted a 320 x 256 FPA onto its Phoenix camera-head platform and imaged a hot soldering gun in front of a computer monitor (see Fig. 3). The results clearly show that a standard InGaAs camera can detect hardly any radiation from the CRT, while the VisGaAs-based imager can clearly detect both features.

Camera and frame grabber team up to combat SARS

To restrict the spread of severe acute respiratory syndrome (SARS), Land Instruments International (Sheffield, UK; www.landinst.com) has developed a PC-based system that detects elevated body temperatures in large numbers of people. Because individuals with SARS have a fever and above-normal skin temperature, infrared cameras can be used to screen for people who may have the viral illness.

Land Instruments' Human Body Temperature Monitoring System (HBTMS) uses the company's FTI Mv Thermal Imager with an array of 160 × 120 pixels to capture a thermographic image of a human body (typically the face) at a distance of 2 to 3 m. Data captured are then compared with a 988 blackbody furnace calibration source from Isothermal Technology (Isotech, Southport, UK; www.isotech.co.uk). Permanently positioned in the field of view of the imager, this calibrated temperature reference source is set at 38°C and provides a reference area in the live image scene. The imager is then adjusted to maintain this reference area at a fixed radiance value (200).

To capture images from the FTI Mv, the camera is coupled to a Universal Interface Box (UIB), which allows the imager to be driven and controlled from a PC up to 1000 m away. Video and RS422 control signals are then transmitted to the PC from the UIB. IR images are transmitted as an analog video signal via the UIB and digitized by a PC-based MV 510 frame grabber from MuTech (Billerica, MA, USA; www.mutech.com), which transfers digital data to PC memory or VGA display. Because the board offers programmable gain and offset control functionality, the incoming video signal can be adjusted for the maximum digitization range of the camera.

Once the image has been acquired, it is analyzed by Land's image-processing software, which displays the images, triggers alarms via a digital output card, and records images to disk. Any pixels in the imaged area with radiance greater than the set threshold trigger an alarm output. To highlight individuals who may have the disease, a monochrome palette is used, with any pixel in the scene whose radiance level is above the threshold highlighted in red.
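The thresholding step described above can be sketched in a few lines. This is not Land's software; it is a minimal illustration that assumes radiance counts already scaled to 0-255, and the function name, frame layout, and sample values are hypothetical.

```python
RED = (255, 0, 0)


def highlight(frame, threshold):
    """Return (rgb_image, alarm) for a frame of radiance counts.

    Pixels above threshold are painted red and raise the alarm flag.
    All other pixels are drawn in gray (the monochrome palette).
    """
    alarm = False
    image = []
    for row in frame:
        out_row = []
        for value in row:
            if value > threshold:
                out_row.append(RED)
                alarm = True
            else:
                level = max(0, min(255, int(value)))
                out_row.append((level, level, level))
        image.append(out_row)
    return image, alarm


# Made-up 2 x 2 frame with one pixel above the threshold.
frame = [
    [120, 130],
    [205, 140],
]
image, alarm = highlight(frame, threshold=200)
```

In a real system the alarm flag would drive the digital output card, and the threshold would be chosen relative to the blackbody reference in the scene.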

Land Instruments has developed a PC-based system around its FTI Mv Thermal Imager, a PC-based frame grabber and proprietary software (top). In operation, captured images are compared with a fixed radiance value, and pixels with radiance levels higher than this value are highlighted in red (bottom).

Photosensors...

At a company I previously worked with, a great many photosensors, or photoswitches, were used to detect workpiece presence. They came in different models and shapes. Despite their different physical appearance, almost all follow one principle of operation.

A photosensor is an electronic component that detects the presence of visible light, infrared (IR) transmission, and/or ultraviolet (UV) energy. Most photosensors consist of a semiconductor having a property called photoconductivity, in which the electrical conductance varies depending on the intensity of radiation striking the material.

The most common types of photosensor are the photodiode, the bipolar phototransistor, and the photoFET (photosensitive field-effect transistor). These devices are essentially the same as the ordinary diode, bipolar transistor, and field-effect transistor, except that the packages have transparent windows that allow radiant energy to reach the junctions between the semiconductor materials inside. Bipolar and field-effect phototransistors provide amplification in addition to their sensing capabilities.

Photosensors are used in a great variety of electronic devices, circuits, and systems, including:

* fiber optic systems

* optical scanners

* wireless LAN

* automatic lighting controls

* machine vision systems

* electric eyes

* optical disk drives

* optical memory chips

* remote control devices

A Quick Guide to Thermocouples

HTTP://WWW.IAMECHATRONICS.COM

Providing Information and Continuing Education for Industrial Automation and Mechatronics Technology